Much of the work on my live performances has involved finding ways to make laptop performance more engaging for audiences and to overcome the performer-stuck-behind-laptop phenomenon.

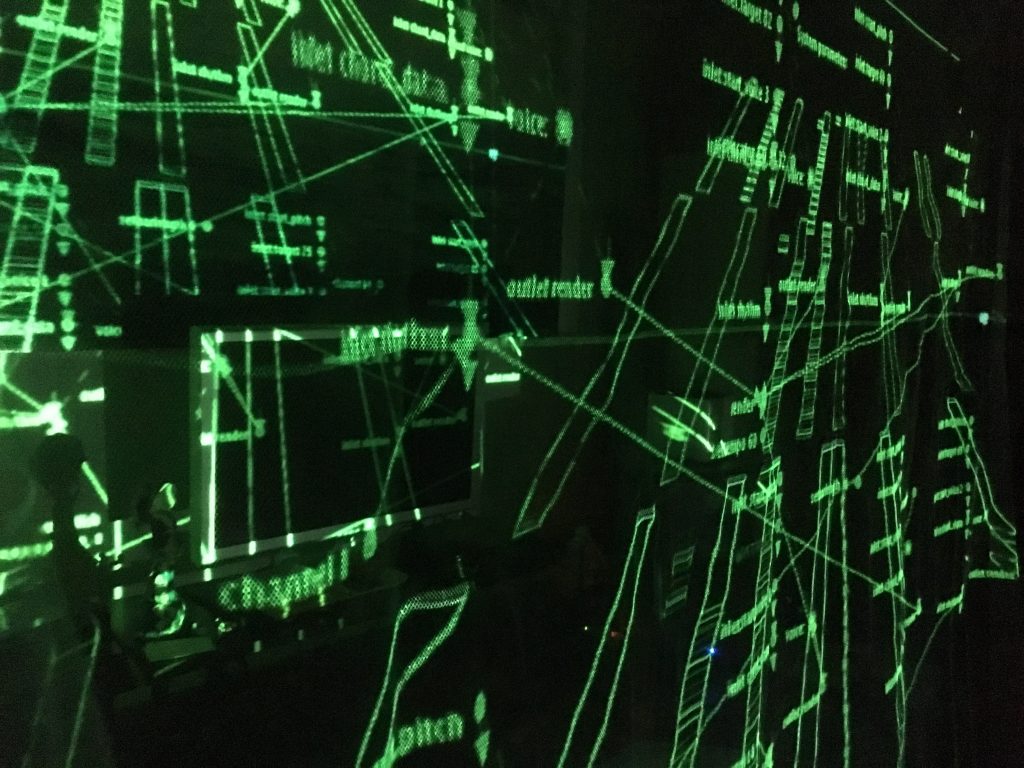

My goal has been to design a generative music environment that functions as an effective live-coding interface while also providing aesthetically interesting visuals suitable for projection during performances. The aim is to create a relationship between audience and performer similar to that of watching an instrumentalist, where manipulation of the instrument is the visual experience and the interface is the aesthetic.

While this approach has been somewhat successful, there are still many ideas I’d like to implement to achieve this goal more fully.

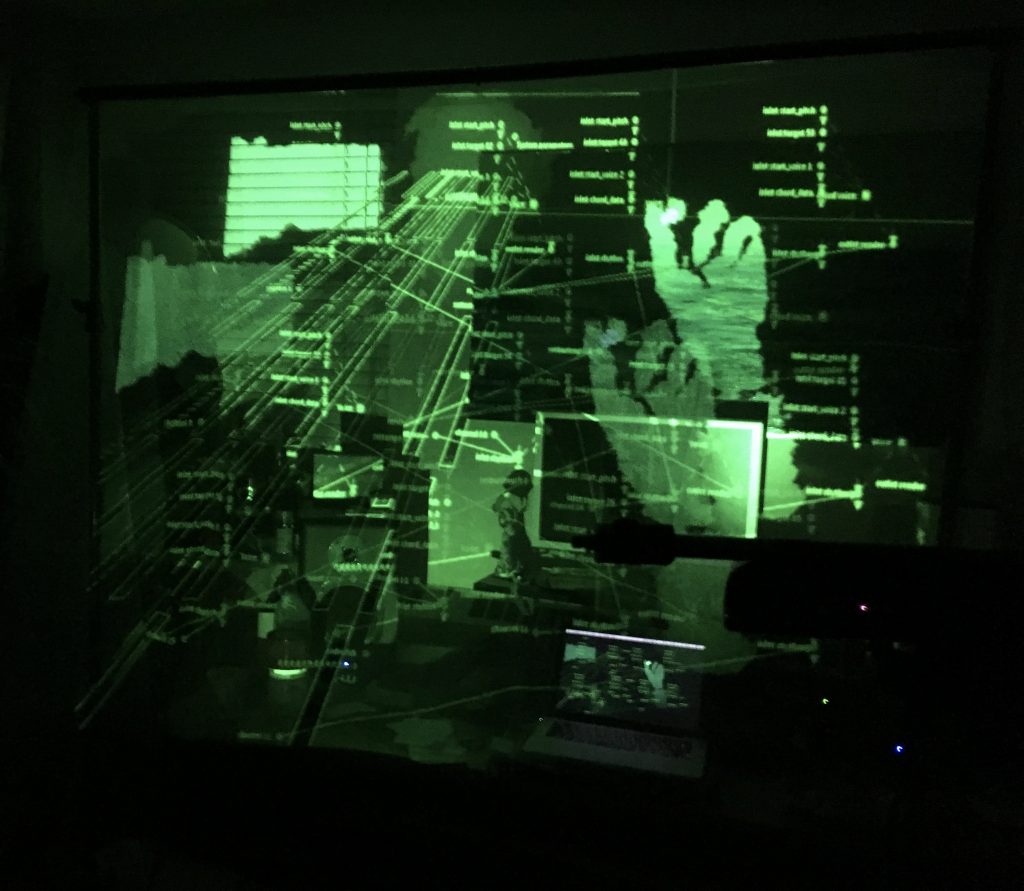

Recently, I’ve been exploring two ways to improve the visual aspects of my performances. The first is using a Kinect motion sensor to provide gestural control of onscreen elements, along with realtime visuals in the form of a depth-image silhouette that embodies the performer within the interface. The second is projecting onto transparent fabrics placed at the front of the stage, between the audience and the performer and equipment.

The work so far, pictured below in my studio, has been going exceptionally well, and I cannot wait to try this out in an actual performance!